The km-scale cloud by DKRZ#

The km-scale cloud, formerly known as the EERIE cloud, addresses data users who cannot work next to the data on DKRZ's high-performance computer Levante. It provides open, efficient, Zarr-based access to high-resolution Earth System Model (ESM) datasets produced by

- projects like EERIE and nextGEMS

- models like ICON and IFS-FESOM.

Whether the data was originally stored as Zarr, NetCDF, or GRIB, the km-scale cloud delivers byte ranges of the original files as Zarr chunks, without rewriting, relocating, or converting them. In addition, it incorporates Dask for on-demand, server-side data reduction tasks such as compression and the computation of summary statistics. This combination makes working with petabyte-scale climate data practical and scalable, even for users with limited compute resources. Integrated support for catalog tools like STAC (SpatioTemporal Asset Catalog) and intake, organized in a semantic hierarchy, allows users to discover, query, and subset data efficiently through standardized, interoperable interfaces.

This is enabled by the Python package cloudify, described in detail in Wachsmann, 2025.
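The byte-range idea described above can be sketched in plain Python. This is not cloudify's implementation: the reference layout (chunk key mapped to file, offset, and length) only follows the general kerchunk idea, and the file contents, the variable name `tas`, and the helper `read_chunk` are made up for illustration.

```python
import os
import struct
import tempfile

# Write a stand-in "original file": a 16-byte header followed by two chunks
# of four little-endian float32 values each. Real NetCDF or GRIB files are
# far more complex; this layout is purely illustrative.
header = b"FAKEHEADER______"  # 16 bytes
chunk0 = struct.pack("<4f", 1.0, 2.0, 3.0, 4.0)
chunk1 = struct.pack("<4f", 5.0, 6.0, 7.0, 8.0)

with tempfile.NamedTemporaryFile(delete=False, suffix=".bin") as f:
    f.write(header + chunk0 + chunk1)
    path = f.name

# Reference table: Zarr-style chunk key -> (file, byte offset, byte length).
# The file itself is never rewritten, relocated, or converted.
references = {
    "tas/0": (path, len(header), len(chunk0)),
    "tas/1": (path, len(header) + len(chunk0), len(chunk1)),
}


def read_chunk(key):
    """Return the raw bytes of one chunk by reading only its byte range."""
    file_path, offset, length = references[key]
    with open(file_path, "rb") as fh:
        fh.seek(offset)
        return fh.read(length)


# Decode the second chunk without touching the rest of the file.
values = struct.unpack("<4f", read_chunk("tas/1"))
print(values)  # (5.0, 6.0, 7.0, 8.0)

os.remove(path)  # clean up the temporary file
```

A server built on this idea only needs the small reference table to answer a chunk request; the bulk data stays where it is.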

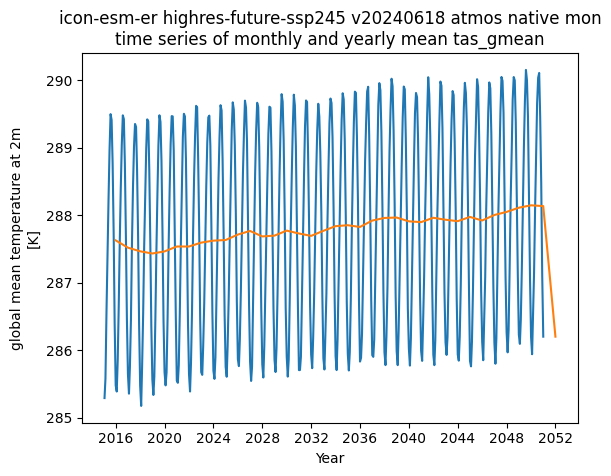

Showcase: Time series quickplot for an ICON simulation#

import xarray as xr

dataset_id="icon-esm-er.highres-future-ssp245.v20240618.atmos.native.mon"

ds=xr.open_dataset(

f"https://km-scale-cloud.dkrz.de/datasets/{dataset_id}/kerchunk",

engine="zarr",

chunks="auto",

zarr_format=2,

)

import matplotlib.pyplot as plt

var="tas_gmean"

vmean = ds[var].squeeze()

vmean_yr = vmean.resample(time="YE").mean()

vmean.plot()

vmean_yr.plot()

plt.title(" ".join(dataset_id.split(".")) + f"\ntime series of monthly and yearly mean {var}")

plt.xlabel("Year")

Text(0.5, 0, 'Year')

Km-scale datasets and how to approach them#

The km-scale-cloud provides many endpoints (URIs) with different features. Under this link, all available endpoints are documented.

Helpful dataset endpoints#

A key endpoint is the /datasets endpoint which provides a simple list of datasets accessible through the km-scale-cloud. We can use it like:

import requests

kmscale_uri = "https://km-scale-cloud.dkrz.de"

kmscale_datasets_uri=kmscale_uri+"/datasets"

kmscale_datasets=requests.get(kmscale_datasets_uri).json()

print(f"The km-scale-cloud provides {len(kmscale_datasets)} datasets such as:")

print(kmscale_datasets[0])

The km-scale-cloud provides 571 datasets such as:

cosmo-rea-1hrPt_atmos
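Because the endpoint returns a plain list of ID strings, ordinary Python is enough for a first overview. The following is a small sketch that uses a hand-picked sample of IDs appearing on this page instead of a live request. Grouping by the text before the first dot is only an assumption about the naming scheme, and `group_by_prefix` is a hypothetical helper.

```python
from collections import defaultdict

# Sample of dataset IDs copied from this page (not a live response).
sample_ids = [
    "cosmo-rea-1hrPt_atmos",
    "icon-esm-er.highres-future-ssp245.v20240618.atmos.native.mon",
    "icon-epoc.control-1990.v20250325.atmos.native.mon",
    "era5-dkrz.surface_analysis_daily",
    "era5-dkrz.surface_analysis_hourly",
    "orcestra_1250m_2d_hpz12",
]


def group_by_prefix(dataset_ids):
    """Group IDs by the part before the first dot (assumed to name the source)."""
    groups = defaultdict(list)
    for dataset_id in dataset_ids:
        prefix = dataset_id.split(".", 1)[0]
        groups[prefix].append(dataset_id)
    return dict(groups)


groups = group_by_prefix(sample_ids)
for prefix, ids in sorted(groups.items()):
    print(f"{prefix}: {len(ids)} dataset(s)")
```

With the real list, `sample_ids` would simply be replaced by `kmscale_datasets`.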

For each dataset, an Xarray dataset view endpoint exists, which helps to get an overview of the dataset's content. The endpoint is constructed like datasets/DATASET_ID/, e.g.:

dataset_pattern="atmos.native.mon"

global_atm_mon_mean_ds_name=next(

a for a in kmscale_datasets if dataset_pattern in a

)

print(

"An available atmospheric global mean monthly mean dataset is: "+

global_atm_mon_mean_ds_name

)

global_atm_mon_mean_ds_uri=kmscale_datasets_uri+"/"+global_atm_mon_mean_ds_name

An available atmospheric global mean monthly mean dataset is: icon-epoc.control-1990.v20250325.atmos.native.mon

The key endpoint is the default Zarr endpoint, constructed like datasets/DATASET_ID/kerchunk. Such an address can be used by any tool that can read Zarr over HTTP, for example Xarray:

xr.open_dataset(

global_atm_mon_mean_ds_uri+"/kerchunk",

engine="zarr"

)

<xarray.Dataset> Size: 30kB

Dimensions: (time: 420, lat: 1, lon: 1)

Coordinates:

* time (time) datetime64[ns] 3kB 1990-02-01 ... 2025-01-01

* lat (lat) float64 8B 0.0

* lon (lon) float64 8B 0.0

Data variables: (12/16)

duphyvi_gmean (time, lat, lon) float32 2kB ...

evap_gmean (time, lat, lon) float32 2kB ...

fwfoce_gmean (time, lat, lon) float32 2kB ...

kedisp_gmean (time, lat, lon) float32 2kB ...

prec_gmean (time, lat, lon) float32 2kB ...

radbal_gmean (time, lat, lon) float32 2kB ...

... ...

tas_gmean (time, lat, lon) float32 2kB ...

udynvi_gmean (time, lat, lon) float32 2kB ...

ufcs_gmean (time, lat, lon) float32 2kB ...

ufts_gmean (time, lat, lon) float32 2kB ...

ufvs_gmean (time, lat, lon) float32 2kB ...

uphybal_gmean (time, lat, lon) float32 2kB ...

Attributes:

CDI: Climate Data Interface version 2.4.0 (https://mpimet.mpg.de...

Conventions: CF-1.6

comment: Helmuth Haak (shared) (b383127) on l30288 (Linux 4.18.0-477...

history: ./icon at 20241025 152041

institution: Max Planck Institute for Meteorology/Deutscher Wetterdienst

references: see MPIM/DWD publications

source: version: 2024.10; revision: icon-2024.10-5-g59df2b1543c98c8...

title: ICON simulation

Details from the expanded dataset view:

Coordinates:

- time (time) datetime64[ns], 1990-02-01 ... 2025-01-01; axis: T; standard_name: time

- lat (lat) float64, 0.0; axis: Y; long_name and standard_name: latitude; units: degrees_north

- lon (lon) float64, 0.0; axis: X; long_name and standard_name: longitude; units: degrees_east

Data variables, listed as name: long_name [units]. Each has dimensions (time, lat, lon), dtype float32, 420 values, code 255, and a standard_name equal to the variable name:

- duphyvi_gmean: mean vertically integrated moist internal energy change by physics [J m-2]

- evap_gmean: global mean evaporation flux [kg m-2 s-1]

- fwfoce_gmean: mean surface freshwater flux over ocean surface [kg m-2 s-1]

- kedisp_gmean: mean vert. integr. dissip. kin. energy [W m-2]

- prec_gmean: global mean precipitation flux [kg m-2 s-1]

- radbal_gmean: global mean net radiative flux into atmosphere [W m-2]

- radtop_gmean: global mean toa net total radiation [W m-2]

- rlut_gmean: global mean toa outgoing longwave radiation [W m-2]

- rsdt_gmean: global mean toa incident shortwave radiation [W m-2]

- rsut_gmean: global mean toa outgoing shortwave radiation [W m-2]

- tas_gmean: global mean temperature at 2m [K]

- udynvi_gmean: mean vertically integrated moist internal energy after dynamics [J m-2]

- ufcs_gmean: mean energy flux at surface from condensate [W m-2]

- ufts_gmean: mean energy flux at surface from thermal exchange [W m-2]

- ufvs_gmean: mean energy flux at surface from vapor exchange [W m-2]

- uphybal_gmean: mean energy balance in aes physics [W m-2]

Attributes that are truncated above, in full:

- CDI: Climate Data Interface version 2.4.0 (https://mpimet.mpg.de/cdi)

- comment: Helmuth Haak (shared) (b383127) on l30288 (Linux 4.18.0-477.58.1.el8_8.x86_64 x86_64)

- source: version: 2024.10; revision: icon-2024.10-5-g59df2b1543c98c80fbd96124c3f9b587a4680d19-dirty; URL: git@gitlab.dkrz.de:icon/icon-mpim.git

The Datatree: All in one Zarr-group#

Note: This requires xarray>=2025.7.1, and the functionality is evolving fast.

Opening Zarr and representing it in Xarray does not cost many resources. It is fast and needs little memory. One strategy for working with multiple datasets is therefore to just open everything.

We can access the full >10 PB of the km-scale-cloud using only xarray with the following code:

dt = xr.open_datatree(

"https://km-scale-cloud.dkrz.de/datasets",

engine="zarr",

zarr_format=2,

chunks=None,

create_default_indexes=False,

decode_cf=False,

)

The dt object is an Xarray DataTree. It allows you to browse and discover the full content of the km-scale-cloud, similar to the functionality of intake, but without requiring an additional tool.

The workflow using the datatree involves the following steps:

- filter: Subset the tree so that it only includes the datasets you want. You can apply any customized function using the datasets as input. In the following example, we look for a specific dataset name.

- chunk, decode_cf: If you need coordinate values for subsetting, or if you are interested in more than one chunk, use these functions to get lazy access to the coordinates.

- Instead of writing loops over a list or a dictionary of datasets, you can now use the .map_over_datasets function to apply a function to all datasets at once.
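The shape of this workflow (filter, then map a function over every dataset, then drop empty results) can be illustrated with a plain-Python analogy. This sketch deliberately does not use xarray: the tree is a flat dictionary from made-up paths to made-up records, and `filter_tree`, `map_tree`, `prune`, and `select` are hypothetical helpers that only mimic the idea.

```python
# Stand-in for a tree: path -> record with a time axis and some variables.
# All paths and values are invented for illustration.
tree = {
    "/icon/run-a": {"time": ["2024-08-09", "2024-08-10"], "tas": [287.1, 287.5]},
    "/icon/run-b": {"time": ["2024-08-10"], "pr": [3.1e-5]},
    "/ifs/run-c": {"time": ["2024-08-10", "2024-08-11"], "tas": [288.0, 288.4]},
}


def filter_tree(tree, predicate):
    """Keep only the entries whose path satisfies the predicate."""
    return {path: ds for path, ds in tree.items() if predicate(path)}


def map_tree(tree, func):
    """Apply func to every record; func may return None for 'not applicable'."""
    return {path: func(ds) for path, ds in tree.items()}


def prune(tree):
    """Drop entries for which the mapped function returned None."""
    return {path: ds for path, ds in tree.items() if ds is not None}


def select(ds, var="tas", time_sel="2024-08-10"):
    """Pick one variable at one time step, or None if either is missing."""
    if var not in ds or time_sel not in ds["time"]:
        return None
    index = ds["time"].index(time_sel)
    return {"time": [time_sel], var: [ds[var][index]]}


filtered = filter_tree(tree, lambda path: path.startswith("/icon"))
result = prune(map_tree(filtered, select))
print(result)
```

Only `/icon/run-a` survives: `/ifs/run-c` is removed by the filter, and `/icon/run-b` is pruned because it has no `tas` variable.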

path_filter="s2024-08-10"

filtered_tree=dt.filter(lambda node: path_filter in node.path)

display(filtered_tree)

<xarray.DataTree> Group: /

filtered_tree_chunked=filtered_tree.chunk()

filtered_tree_chunked_decoded=filtered_tree_chunked.map_over_datasets(

lambda ds: xr.decode_cf(ds) if "time" in ds else None

)

Any .compute() or .load() will trigger data retrieval. Thus, make sure you first subset before you download.

The .nbytes attribute shows how much uncompressed data would be loaded into memory.

filtered_tree_chunked_decoded.nbytes/1024**2

0.0
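As a back-of-the-envelope check, the uncompressed size is the number of elements times the item size of the dtype, summed over all arrays. The sketch below redoes this arithmetic in plain Python for the monthly global mean dataset shown earlier on this page (16 float32 variables of shape (420, 1, 1) plus the three coordinates). `array_nbytes` and the `ITEMSIZE` table are hypothetical helpers, not part of xarray.

```python
from math import prod

# Bytes per element for the dtypes that occur in the example dataset.
ITEMSIZE = {"float32": 4, "float64": 8, "datetime64[ns]": 8}


def array_nbytes(shape, dtype):
    """Uncompressed size in bytes of an array with the given shape and dtype."""
    return prod(shape) * ITEMSIZE[dtype]


# 16 data variables, each (time=420, lat=1, lon=1) float32.
data_vars = 16 * array_nbytes((420, 1, 1), "float32")

# Coordinates: time (420 timestamps), lat and lon (one float64 each).
coords = (
    array_nbytes((420,), "datetime64[ns]")
    + array_nbytes((1,), "float64")
    + array_nbytes((1,), "float64")
)

total = data_vars + coords
print(total)            # 30256 bytes, which xarray rounds to "30kB"
print(total / 1024**2)  # the same size in MiB, the unit used above
```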

time_sel="2024-08-10"

var="tas"

filtered_tree_chunked_decoded_subsetted=filtered_tree_chunked_decoded.map_over_datasets(

lambda ds: ds[[var]].sel(time=time_sel) if all(a in ds for a in [var,"time"]) else None

).prune()

print(filtered_tree_chunked_decoded_subsetted.nbytes/1024**2)

0.0

Before running .load(), add all lazy functions to Dask's task graph:

dt_workflow=filtered_tree_chunked_decoded_subsetted.map_over_datasets(lambda ds: ds.mean(dim="time") if "time" in ds else None)

%%time

ds_mean=dt_workflow.compute().leaves[0].to_dataset()

ds_mean

CPU times: user 407 μs, sys: 0 ns, total: 407 μs

Wall time: 439 μs

<xarray.Dataset> Size: 0B

Dimensions: ()

Data variables:

*empty*

STAC interface#

The most interoperable catalog interface for the km-scale-cloud is STAC (SpatioTemporal Asset Catalog). You can use any STAC browser implementation to browse and discover the dynamically created km-scale cloud collection, in which all datasets are linked as dynamic items.

For programmatic access, follow this notebook.
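A STAC collection is a JSON document, so its `links` array can also be inspected without a STAC library. The sketch below works on a small hand-copied excerpt with the same structure as the collection output on this page, rather than on a live request. `child_links` is a hypothetical helper.

```python
import json

# Excerpt in the shape of the collection document (copied by hand, shortened).
collection_json = """
{
  "type": "Collection",
  "id": "eerie-cloud-all",
  "stac_version": "1.1.0",
  "links": [
    {"rel": "self",
     "href": "https://km-scale-cloud.dkrz.de/stac-collection-all.json",
     "type": "application/json"},
    {"rel": "child",
     "href": "https://eerie.cloud.dkrz.de/datasets/cosmo-rea-1hrPt_atmos/stac",
     "type": "application/json",
     "title": "cosmo-rea-1hrPt_atmos"},
    {"rel": "child",
     "href": "https://eerie.cloud.dkrz.de/datasets/orcestra_1250m_2d_hpz12/stac",
     "type": "application/json",
     "title": "orcestra_1250m_2d_hpz12"}
  ]
}
"""


def child_links(collection):
    """Map the title of every 'child' link to its href."""
    return {
        link["title"]: link["href"]
        for link in collection["links"]
        if link.get("rel") == "child"
    }


collection = json.loads(collection_json)
children = child_links(collection)
for title, href in children.items():
    print(title, "->", href)
```

Each child href points to the STAC item of one dataset, so the same dictionary could drive a loop that fetches the items one by one.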

import pystac

kmscale_collection_uri="https://km-scale-cloud.dkrz.de/stac-collection-all.json"

kmscale_collection=pystac.Collection.from_file(kmscale_collection_uri)

kmscale_collection

- type "Collection"

- id "eerie-cloud-all"

- stac_version "1.1.0"

- description " # Items of the eerie.cloud DKRZ hosts a data server named ‘eerie.cloud’ for global high resolution Earth System Model simulation output stored at the German Climate Computing Center (DKRZ). This was developped within the EU project EERIE. Eerie.cloud makes use of the python package xpublish. Xpublish is a plugin for xarray (Hoyer, 2023) which is widely used in the Earth System Science community. It serves ESM output formatted as zarr (Miles, 2020) via a RestAPI based on FastAPI. Served in this way, the data imitates cloud-native data (Abernathey, 2021) and features many capabilities of cloud-optimized data. [Imprint](https://www.dkrz.de/en/about-en/contact/impressum) and [Privacy Policy](https://www.dkrz.de/en/about-en/contact/en-datenschutzhinweise). "

links[] 574 items

0

- rel "self"

- href "https://km-scale-cloud.dkrz.de/stac-collection-all.json"

- type "application/json"

1

- rel "root"

- href "https://eerie.cloud.dkrz.de/stac-collection-all.json"

- type "application/json"

- title "ESM data from DKRZ in Zarr format"

2

- rel "parent"

- href "https://swift.dkrz.de/v1/dkrz_7fa6baba-db43-4d12-a295-8e3ebb1a01ed/catalogs/stac-catalog-eeriecloud.json"

- type "application/json"

3

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/cosmo-rea-1hrPt_atmos/stac"

- type "application/json"

- title "cosmo-rea-1hrPt_atmos"

4

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/cosmo-rea-1hrPt_land/stac"

- type "application/json"

- title "cosmo-rea-1hrPt_land"

5

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/cosmo-rea-1hrPt_landIce/stac"

- type "application/json"

- title "cosmo-rea-1hrPt_landIce"

6

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/cosmo-rea-1hr_atmos/stac"

- type "application/json"

- title "cosmo-rea-1hr_atmos"

7

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/cosmo-rea-1hr_land/stac"

- type "application/json"

- title "cosmo-rea-1hr_land"

8

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/cosmo-rea-6hr_atmos/stac"

- type "application/json"

- title "cosmo-rea-6hr_atmos"

9

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/cosmo-rea-day_atmos/stac"

- type "application/json"

- title "cosmo-rea-day_atmos"

10

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/cosmo-rea-day_land/stac"

- type "application/json"

- title "cosmo-rea-day_land"

11

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/cosmo-rea-day_landIce/stac"

- type "application/json"

- title "cosmo-rea-day_landIce"

12

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/cosmo-rea-mon_atmos/stac"

- type "application/json"

- title "cosmo-rea-mon_atmos"

13

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/cosmo-rea-mon_land/stac"

- type "application/json"

- title "cosmo-rea-mon_land"

14

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/cosmo-rea-mon_landIce/stac"

- type "application/json"

- title "cosmo-rea-mon_landIce"

15

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/nextgems.IFS_2.8-FESOM_5-production.2D_daily_healpix128_ocean/stac"

- type "application/json"

- title "nextgems.IFS_2.8-FESOM_5-production.2D_daily_healpix128_ocean"

16

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/nextgems.IFS_2.8-FESOM_5-production.2D_daily_healpix512_ocean/stac"

- type "application/json"

- title "nextgems.IFS_2.8-FESOM_5-production.2D_daily_healpix512_ocean"

17

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/nextgems.IFS_2.8-FESOM_5-production.2D_hourly_0.25deg/stac"

- type "application/json"

- title "nextgems.IFS_2.8-FESOM_5-production.2D_hourly_0.25deg"

18

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/nextgems.IFS_2.8-FESOM_5-production.2D_hourly_healpix128/stac"

- type "application/json"

- title "nextgems.IFS_2.8-FESOM_5-production.2D_hourly_healpix128"

19

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/nextgems.IFS_2.8-FESOM_5-production.2D_hourly_healpix2048/stac"

- type "application/json"

- title "nextgems.IFS_2.8-FESOM_5-production.2D_hourly_healpix2048"

20

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/nextgems.IFS_2.8-FESOM_5-production.2D_monthly_0.25deg/stac"

- type "application/json"

- title "nextgems.IFS_2.8-FESOM_5-production.2D_monthly_0.25deg"

21

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/nextgems.IFS_2.8-FESOM_5-production.2D_monthly_healpix128/stac"

- type "application/json"

- title "nextgems.IFS_2.8-FESOM_5-production.2D_monthly_healpix128"

22

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/nextgems.IFS_2.8-FESOM_5-production.2D_monthly_healpix2048/stac"

- type "application/json"

- title "nextgems.IFS_2.8-FESOM_5-production.2D_monthly_healpix2048"

23

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/nextgems.IFS_2.8-FESOM_5-production.3D_daily_healpix128_ocean/stac"

- type "application/json"

- title "nextgems.IFS_2.8-FESOM_5-production.3D_daily_healpix128_ocean"

24

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/nextgems.IFS_2.8-FESOM_5-production.3D_daily_healpix512_ocean/stac"

- type "application/json"

- title "nextgems.IFS_2.8-FESOM_5-production.3D_daily_healpix512_ocean"

25

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/nextgems.IFS_2.8-FESOM_5-production.3D_hourly_0.25deg/stac"

- type "application/json"

- title "nextgems.IFS_2.8-FESOM_5-production.3D_hourly_0.25deg"

26

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/nextgems.IFS_2.8-FESOM_5-production.3D_hourly_0.25deg_snow/stac"

- type "application/json"

- title "nextgems.IFS_2.8-FESOM_5-production.3D_hourly_0.25deg_snow"

27

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/nextgems.IFS_2.8-FESOM_5-production.3D_hourly_healpix128/stac"

- type "application/json"

- title "nextgems.IFS_2.8-FESOM_5-production.3D_hourly_healpix128"

28

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/nextgems.IFS_2.8-FESOM_5-production.3D_hourly_healpix128_snow/stac"

- type "application/json"

- title "nextgems.IFS_2.8-FESOM_5-production.3D_hourly_healpix128_snow"

29

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/nextgems.IFS_2.8-FESOM_5-production.3D_hourly_healpix2048/stac"

- type "application/json"

- title "nextgems.IFS_2.8-FESOM_5-production.3D_hourly_healpix2048"

30

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/nextgems.IFS_2.8-FESOM_5-production.3D_hourly_healpix2048_snow/stac"

- type "application/json"

- title "nextgems.IFS_2.8-FESOM_5-production.3D_hourly_healpix2048_snow"

31

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/nextgems.IFS_2.8-FESOM_5-production-deep-off.2D_daily_healpix128_ocean/stac"

- type "application/json"

- title "nextgems.IFS_2.8-FESOM_5-production-deep-off.2D_daily_healpix128_ocean"

32

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/nextgems.IFS_2.8-FESOM_5-production-deep-off.2D_daily_healpix512_ocean/stac"

- type "application/json"

- title "nextgems.IFS_2.8-FESOM_5-production-deep-off.2D_daily_healpix512_ocean"

33

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/nextgems.IFS_2.8-FESOM_5-production-deep-off.2D_hourly_0.25deg/stac"

- type "application/json"

- title "nextgems.IFS_2.8-FESOM_5-production-deep-off.2D_hourly_0.25deg"

34

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/nextgems.IFS_2.8-FESOM_5-production-deep-off.2D_hourly_healpix128/stac"

- type "application/json"

- title "nextgems.IFS_2.8-FESOM_5-production-deep-off.2D_hourly_healpix128"

35

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/nextgems.IFS_2.8-FESOM_5-production-deep-off.2D_hourly_healpix2048/stac"

- type "application/json"

- title "nextgems.IFS_2.8-FESOM_5-production-deep-off.2D_hourly_healpix2048"

36

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/nextgems.IFS_2.8-FESOM_5-production-deep-off.2D_monthly_0.25deg/stac"

- type "application/json"

- title "nextgems.IFS_2.8-FESOM_5-production-deep-off.2D_monthly_0.25deg"

37

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/nextgems.IFS_2.8-FESOM_5-production-deep-off.2D_monthly_healpix128/stac"

- type "application/json"

- title "nextgems.IFS_2.8-FESOM_5-production-deep-off.2D_monthly_healpix128"

38

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/nextgems.IFS_2.8-FESOM_5-production-deep-off.2D_monthly_healpix2048/stac"

- type "application/json"

- title "nextgems.IFS_2.8-FESOM_5-production-deep-off.2D_monthly_healpix2048"

39

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/nextgems.IFS_2.8-FESOM_5-production-deep-off.3D_daily_healpix128_ocean/stac"

- type "application/json"

- title "nextgems.IFS_2.8-FESOM_5-production-deep-off.3D_daily_healpix128_ocean"

40

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/nextgems.IFS_2.8-FESOM_5-production-deep-off.3D_daily_healpix512_ocean/stac"

- type "application/json"

- title "nextgems.IFS_2.8-FESOM_5-production-deep-off.3D_daily_healpix512_ocean"

41

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/nextgems.IFS_2.8-FESOM_5-production-deep-off.3D_hourly_0.25deg/stac"

- type "application/json"

- title "nextgems.IFS_2.8-FESOM_5-production-deep-off.3D_hourly_0.25deg"

42

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/nextgems.IFS_2.8-FESOM_5-production-deep-off.3D_hourly_0.25deg_snow/stac"

- type "application/json"

- title "nextgems.IFS_2.8-FESOM_5-production-deep-off.3D_hourly_0.25deg_snow"

43

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/nextgems.IFS_2.8-FESOM_5-production-deep-off.3D_hourly_healpix128/stac"

- type "application/json"

- title "nextgems.IFS_2.8-FESOM_5-production-deep-off.3D_hourly_healpix128"

44

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/nextgems.IFS_2.8-FESOM_5-production-deep-off.3D_hourly_healpix128_snow/stac"

- type "application/json"

- title "nextgems.IFS_2.8-FESOM_5-production-deep-off.3D_hourly_healpix128_snow"

45

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/nextgems.IFS_2.8-FESOM_5-production-deep-off.3D_hourly_healpix2048/stac"

- type "application/json"

- title "nextgems.IFS_2.8-FESOM_5-production-deep-off.3D_hourly_healpix2048"

46

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/nextgems.IFS_2.8-FESOM_5-production-deep-off.3D_hourly_healpix2048_snow/stac"

- type "application/json"

- title "nextgems.IFS_2.8-FESOM_5-production-deep-off.3D_hourly_healpix2048_snow"

47

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/orcestra_1250m_2d_hpz12/stac"

- type "application/json"

- title "orcestra_1250m_2d_hpz12"

48

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/orcestra_1250m_3d_hpz12/stac"

- type "application/json"

- title "orcestra_1250m_3d_hpz12"

49

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/era5-dkrz.pressure-level_analysis_daily/stac"

- type "application/json"

- title "era5-dkrz.pressure-level_analysis_daily"

50

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/era5-dkrz.pressure-level_analysis_monthly/stac"

- type "application/json"

- title "era5-dkrz.pressure-level_analysis_monthly"

51

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/era5-dkrz.surface_analysis_daily/stac"

- type "application/json"

- title "era5-dkrz.surface_analysis_daily"

52

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/era5-dkrz.surface_analysis_hourly/stac"

- type "application/json"

- title "era5-dkrz.surface_analysis_hourly"

53

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/era5-dkrz.surface_analysis_monthly/stac"

- type "application/json"

- title "era5-dkrz.surface_analysis_monthly"

54

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/era5-dkrz.surface_forecast_hourly/stac"

- type "application/json"

- title "era5-dkrz.surface_forecast_hourly"

55

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/era5-dkrz.surface_forecast_monthly/stac"

- type "application/json"

- title "era5-dkrz.surface_forecast_monthly"

56

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/cordex-cmip6.DD.MEU-3.CLMcom-Hereon.ERA5.evaluation.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.mon/stac"

- type "application/json"

- title "cordex-cmip6.DD.MEU-3.CLMcom-Hereon.ERA5.evaluation.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.mon"

57

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/cordex-cmip6.DD.MEU-3.CLMcom-Hereon.ERA5.evaluation.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.day/stac"

- type "application/json"

- title "cordex-cmip6.DD.MEU-3.CLMcom-Hereon.ERA5.evaluation.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.day"

58

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/cordex-cmip6.DD.MEU-3.CLMcom-Hereon.ERA5.evaluation.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.1hrPt/stac"

- type "application/json"

- title "cordex-cmip6.DD.MEU-3.CLMcom-Hereon.ERA5.evaluation.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.1hrPt"

59

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/cordex-cmip6.DD.MEU-3.CLMcom-Hereon.ERA5.evaluation.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.fx/stac"

- type "application/json"

- title "cordex-cmip6.DD.MEU-3.CLMcom-Hereon.ERA5.evaluation.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.fx"

60

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/cordex-cmip6.DD.MEU-3.CLMcom-Hereon.ERA5.evaluation.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.1hr/stac"

- type "application/json"

- title "cordex-cmip6.DD.MEU-3.CLMcom-Hereon.ERA5.evaluation.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.1hr"

61

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/cordex-cmip6.DD.MEU-3.CLMcom-Hereon.ERA5.evaluation.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.6hr/stac"

- type "application/json"

- title "cordex-cmip6.DD.MEU-3.CLMcom-Hereon.ERA5.evaluation.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.6hr"

62

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/cordex-cmip6.DD.MEU-3.CLMcom-Hereon.EC-Earth3-Veg.historical.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.mon/stac"

- type "application/json"

- title "cordex-cmip6.DD.MEU-3.CLMcom-Hereon.EC-Earth3-Veg.historical.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.mon"

63

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/cordex-cmip6.DD.MEU-3.CLMcom-Hereon.EC-Earth3-Veg.historical.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.6hrPt/stac"

- type "application/json"

- title "cordex-cmip6.DD.MEU-3.CLMcom-Hereon.EC-Earth3-Veg.historical.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.6hrPt"

64

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/cordex-cmip6.DD.MEU-3.CLMcom-Hereon.EC-Earth3-Veg.historical.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.day/stac"

- type "application/json"

- title "cordex-cmip6.DD.MEU-3.CLMcom-Hereon.EC-Earth3-Veg.historical.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.day"

65

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/cordex-cmip6.DD.MEU-3.CLMcom-Hereon.EC-Earth3-Veg.historical.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.1hrPt/stac"

- type "application/json"

- title "cordex-cmip6.DD.MEU-3.CLMcom-Hereon.EC-Earth3-Veg.historical.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.1hrPt"

66

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/cordex-cmip6.DD.MEU-3.CLMcom-Hereon.EC-Earth3-Veg.historical.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.fx/stac"

- type "application/json"

- title "cordex-cmip6.DD.MEU-3.CLMcom-Hereon.EC-Earth3-Veg.historical.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.fx"

67

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/cordex-cmip6.DD.MEU-3.CLMcom-Hereon.EC-Earth3-Veg.historical.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.dayPt/stac"

- type "application/json"

- title "cordex-cmip6.DD.MEU-3.CLMcom-Hereon.EC-Earth3-Veg.historical.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.dayPt"

68

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/cordex-cmip6.DD.MEU-3.CLMcom-Hereon.EC-Earth3-Veg.historical.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.fxPt/stac"

- type "application/json"

- title "cordex-cmip6.DD.MEU-3.CLMcom-Hereon.EC-Earth3-Veg.historical.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.fxPt"

69

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/cordex-cmip6.DD.MEU-3.CLMcom-Hereon.EC-Earth3-Veg.historical.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.1hr/stac"

- type "application/json"

- title "cordex-cmip6.DD.MEU-3.CLMcom-Hereon.EC-Earth3-Veg.historical.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.1hr"

70

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/cordex-cmip6.DD.MEU-3.CLMcom-Hereon.EC-Earth3-Veg.historical.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.monPt/stac"

- type "application/json"

- title "cordex-cmip6.DD.MEU-3.CLMcom-Hereon.EC-Earth3-Veg.historical.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.monPt"

71

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/cordex-cmip6.DD.MEU-3.CLMcom-Hereon.EC-Earth3-Veg.historical.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.6hr/stac"

- type "application/json"

- title "cordex-cmip6.DD.MEU-3.CLMcom-Hereon.EC-Earth3-Veg.historical.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.6hr"

72

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/cordex-cmip6.DD.EUR-12.CLMcom-BTU.CNRM-ESM2-1.ssp585.r1i1p1f2.ICON-CLM-202407-1-1.v1-r1.mon/stac"

- type "application/json"

- title "cordex-cmip6.DD.EUR-12.CLMcom-BTU.CNRM-ESM2-1.ssp585.r1i1p1f2.ICON-CLM-202407-1-1.v1-r1.mon"

73

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/cordex-cmip6.DD.EUR-12.CLMcom-BTU.CNRM-ESM2-1.ssp585.r1i1p1f2.ICON-CLM-202407-1-1.v1-r1.6hrPt/stac"

- type "application/json"

- title "cordex-cmip6.DD.EUR-12.CLMcom-BTU.CNRM-ESM2-1.ssp585.r1i1p1f2.ICON-CLM-202407-1-1.v1-r1.6hrPt"

74

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/cordex-cmip6.DD.EUR-12.CLMcom-BTU.CNRM-ESM2-1.ssp585.r1i1p1f2.ICON-CLM-202407-1-1.v1-r1.day/stac"

- type "application/json"

- title "cordex-cmip6.DD.EUR-12.CLMcom-BTU.CNRM-ESM2-1.ssp585.r1i1p1f2.ICON-CLM-202407-1-1.v1-r1.day"

75

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/cordex-cmip6.DD.EUR-12.CLMcom-BTU.CNRM-ESM2-1.ssp585.r1i1p1f2.ICON-CLM-202407-1-1.v1-r1.1hrPt/stac"

- type "application/json"

- title "cordex-cmip6.DD.EUR-12.CLMcom-BTU.CNRM-ESM2-1.ssp585.r1i1p1f2.ICON-CLM-202407-1-1.v1-r1.1hrPt"

76

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/cordex-cmip6.DD.EUR-12.CLMcom-BTU.CNRM-ESM2-1.ssp585.r1i1p1f2.ICON-CLM-202407-1-1.v1-r1.fx/stac"

- type "application/json"

- title "cordex-cmip6.DD.EUR-12.CLMcom-BTU.CNRM-ESM2-1.ssp585.r1i1p1f2.ICON-CLM-202407-1-1.v1-r1.fx"

77

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/cordex-cmip6.DD.EUR-12.CLMcom-BTU.CNRM-ESM2-1.ssp245.r1i1p1f2.ICON-CLM-202407-1-1.v1-r1.mon/stac"

- type "application/json"

- title "cordex-cmip6.DD.EUR-12.CLMcom-BTU.CNRM-ESM2-1.ssp245.r1i1p1f2.ICON-CLM-202407-1-1.v1-r1.mon"

78

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/cordex-cmip6.DD.EUR-12.CLMcom-BTU.CNRM-ESM2-1.ssp245.r1i1p1f2.ICON-CLM-202407-1-1.v1-r1.6hrPt/stac"

- type "application/json"

- title "cordex-cmip6.DD.EUR-12.CLMcom-BTU.CNRM-ESM2-1.ssp245.r1i1p1f2.ICON-CLM-202407-1-1.v1-r1.6hrPt"

79

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/cordex-cmip6.DD.EUR-12.CLMcom-BTU.CNRM-ESM2-1.ssp245.r1i1p1f2.ICON-CLM-202407-1-1.v1-r1.day/stac"

- type "application/json"

- title "cordex-cmip6.DD.EUR-12.CLMcom-BTU.CNRM-ESM2-1.ssp245.r1i1p1f2.ICON-CLM-202407-1-1.v1-r1.day"

80

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/cordex-cmip6.DD.EUR-12.CLMcom-BTU.CNRM-ESM2-1.ssp245.r1i1p1f2.ICON-CLM-202407-1-1.v1-r1.1hrPt/stac"

- type "application/json"

- title "cordex-cmip6.DD.EUR-12.CLMcom-BTU.CNRM-ESM2-1.ssp245.r1i1p1f2.ICON-CLM-202407-1-1.v1-r1.1hrPt"

81

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/cordex-cmip6.DD.EUR-12.CLMcom-BTU.CNRM-ESM2-1.ssp245.r1i1p1f2.ICON-CLM-202407-1-1.v1-r1.fx/stac"

- type "application/json"

- title "cordex-cmip6.DD.EUR-12.CLMcom-BTU.CNRM-ESM2-1.ssp245.r1i1p1f2.ICON-CLM-202407-1-1.v1-r1.fx"

82

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/cordex-cmip6.DD.EUR-12.CLMcom-BTU.CNRM-ESM2-1.historical.r1i1p1f2.ICON-CLM-202407-1-1.v1-r1.mon/stac"

- type "application/json"

- title "cordex-cmip6.DD.EUR-12.CLMcom-BTU.CNRM-ESM2-1.historical.r1i1p1f2.ICON-CLM-202407-1-1.v1-r1.mon"

83

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/cordex-cmip6.DD.EUR-12.CLMcom-BTU.CNRM-ESM2-1.historical.r1i1p1f2.ICON-CLM-202407-1-1.v1-r1.6hrPt/stac"

- type "application/json"

- title "cordex-cmip6.DD.EUR-12.CLMcom-BTU.CNRM-ESM2-1.historical.r1i1p1f2.ICON-CLM-202407-1-1.v1-r1.6hrPt"

84

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/cordex-cmip6.DD.EUR-12.CLMcom-BTU.CNRM-ESM2-1.historical.r1i1p1f2.ICON-CLM-202407-1-1.v1-r1.day/stac"

- type "application/json"

- title "cordex-cmip6.DD.EUR-12.CLMcom-BTU.CNRM-ESM2-1.historical.r1i1p1f2.ICON-CLM-202407-1-1.v1-r1.day"

85

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/cordex-cmip6.DD.EUR-12.CLMcom-BTU.CNRM-ESM2-1.historical.r1i1p1f2.ICON-CLM-202407-1-1.v1-r1.1hrPt/stac"

- type "application/json"

- title "cordex-cmip6.DD.EUR-12.CLMcom-BTU.CNRM-ESM2-1.historical.r1i1p1f2.ICON-CLM-202407-1-1.v1-r1.1hrPt"

86

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/cordex-cmip6.DD.EUR-12.CLMcom-BTU.CNRM-ESM2-1.historical.r1i1p1f2.ICON-CLM-202407-1-1.v1-r1.fx/stac"

- type "application/json"

- title "cordex-cmip6.DD.EUR-12.CLMcom-BTU.CNRM-ESM2-1.historical.r1i1p1f2.ICON-CLM-202407-1-1.v1-r1.fx"

87

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/cordex-cmip6.DD.EUR-12.CLMcom-BTU.CNRM-ESM2-1.historical.r1i1p1f2.ICON-CLM-202407-1-1.v1-r1.1hr/stac"

- type "application/json"

- title "cordex-cmip6.DD.EUR-12.CLMcom-BTU.CNRM-ESM2-1.historical.r1i1p1f2.ICON-CLM-202407-1-1.v1-r1.1hr"

88

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/cordex-cmip6.DD.EUR-12.CLMcom-BTU.CNRM-ESM2-1.historical.r1i1p1f2.ICON-CLM-202407-1-1.v1-r1.6hr/stac"

- type "application/json"

- title "cordex-cmip6.DD.EUR-12.CLMcom-BTU.CNRM-ESM2-1.historical.r1i1p1f2.ICON-CLM-202407-1-1.v1-r1.6hr"

89

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/cordex-cmip6.DD.EUR-12.CLMcom-BTU.CNRM-ESM2-1.ssp126.r1i1p1f2.ICON-CLM-202407-1-1.v1-r1.mon/stac"

- type "application/json"

- title "cordex-cmip6.DD.EUR-12.CLMcom-BTU.CNRM-ESM2-1.ssp126.r1i1p1f2.ICON-CLM-202407-1-1.v1-r1.mon"

90

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/cordex-cmip6.DD.EUR-12.CLMcom-BTU.CNRM-ESM2-1.ssp126.r1i1p1f2.ICON-CLM-202407-1-1.v1-r1.6hrPt/stac"

- type "application/json"

- title "cordex-cmip6.DD.EUR-12.CLMcom-BTU.CNRM-ESM2-1.ssp126.r1i1p1f2.ICON-CLM-202407-1-1.v1-r1.6hrPt"

91

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/cordex-cmip6.DD.EUR-12.CLMcom-BTU.CNRM-ESM2-1.ssp126.r1i1p1f2.ICON-CLM-202407-1-1.v1-r1.day/stac"

- type "application/json"

- title "cordex-cmip6.DD.EUR-12.CLMcom-BTU.CNRM-ESM2-1.ssp126.r1i1p1f2.ICON-CLM-202407-1-1.v1-r1.day"

92

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/cordex-cmip6.DD.EUR-12.CLMcom-BTU.CNRM-ESM2-1.ssp126.r1i1p1f2.ICON-CLM-202407-1-1.v1-r1.1hrPt/stac"

- type "application/json"

- title "cordex-cmip6.DD.EUR-12.CLMcom-BTU.CNRM-ESM2-1.ssp126.r1i1p1f2.ICON-CLM-202407-1-1.v1-r1.1hrPt"

93

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/cordex-cmip6.DD.EUR-12.CLMcom-BTU.CNRM-ESM2-1.ssp126.r1i1p1f2.ICON-CLM-202407-1-1.v1-r1.fx/stac"

- type "application/json"

- title "cordex-cmip6.DD.EUR-12.CLMcom-BTU.CNRM-ESM2-1.ssp126.r1i1p1f2.ICON-CLM-202407-1-1.v1-r1.fx"

94

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/cordex-cmip6.DD.EUR-12.CLMcom-BTU.CNRM-ESM2-1.ssp126.r1i1p1f2.ICON-CLM-202407-1-1.v1-r1.1hr/stac"

- type "application/json"

- title "cordex-cmip6.DD.EUR-12.CLMcom-BTU.CNRM-ESM2-1.ssp126.r1i1p1f2.ICON-CLM-202407-1-1.v1-r1.1hr"

95

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/cordex-cmip6.DD.EUR-12.CLMcom-BTU.CNRM-ESM2-1.ssp126.r1i1p1f2.ICON-CLM-202407-1-1.v1-r1.6hr/stac"

- type "application/json"

- title "cordex-cmip6.DD.EUR-12.CLMcom-BTU.CNRM-ESM2-1.ssp126.r1i1p1f2.ICON-CLM-202407-1-1.v1-r1.6hr"

96

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/cordex-cmip6.DD.EUR-12.CLMcom-BTU.CNRM-ESM2-1.ssp370.r1i1p1f2.ICON-CLM-202407-1-1.v1-r1.mon/stac"

- type "application/json"

- title "cordex-cmip6.DD.EUR-12.CLMcom-BTU.CNRM-ESM2-1.ssp370.r1i1p1f2.ICON-CLM-202407-1-1.v1-r1.mon"

97

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/cordex-cmip6.DD.EUR-12.CLMcom-BTU.CNRM-ESM2-1.ssp370.r1i1p1f2.ICON-CLM-202407-1-1.v1-r1.6hrPt/stac"

- type "application/json"

- title "cordex-cmip6.DD.EUR-12.CLMcom-BTU.CNRM-ESM2-1.ssp370.r1i1p1f2.ICON-CLM-202407-1-1.v1-r1.6hrPt"

98

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/cordex-cmip6.DD.EUR-12.CLMcom-BTU.CNRM-ESM2-1.ssp370.r1i1p1f2.ICON-CLM-202407-1-1.v1-r1.day/stac"

- type "application/json"

- title "cordex-cmip6.DD.EUR-12.CLMcom-BTU.CNRM-ESM2-1.ssp370.r1i1p1f2.ICON-CLM-202407-1-1.v1-r1.day"

99

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/cordex-cmip6.DD.EUR-12.CLMcom-BTU.CNRM-ESM2-1.ssp370.r1i1p1f2.ICON-CLM-202407-1-1.v1-r1.1hrPt/stac"

- type "application/json"

- title "cordex-cmip6.DD.EUR-12.CLMcom-BTU.CNRM-ESM2-1.ssp370.r1i1p1f2.ICON-CLM-202407-1-1.v1-r1.1hrPt"

100

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/cordex-cmip6.DD.EUR-12.CLMcom-BTU.CNRM-ESM2-1.ssp370.r1i1p1f2.ICON-CLM-202407-1-1.v1-r1.fx/stac"

- type "application/json"

- title "cordex-cmip6.DD.EUR-12.CLMcom-BTU.CNRM-ESM2-1.ssp370.r1i1p1f2.ICON-CLM-202407-1-1.v1-r1.fx"

101

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/cordex-cmip6.DD.EUR-12.CLMcom-BTU.CNRM-ESM2-1.ssp370.r1i1p1f2.ICON-CLM-202407-1-1.v1-r1.1hr/stac"

- type "application/json"

- title "cordex-cmip6.DD.EUR-12.CLMcom-BTU.CNRM-ESM2-1.ssp370.r1i1p1f2.ICON-CLM-202407-1-1.v1-r1.1hr"

102

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/cordex-cmip6.DD.EUR-12.CLMcom-BTU.CNRM-ESM2-1.ssp370.r1i1p1f2.ICON-CLM-202407-1-1.v1-r1.6hr/stac"

- type "application/json"

- title "cordex-cmip6.DD.EUR-12.CLMcom-BTU.CNRM-ESM2-1.ssp370.r1i1p1f2.ICON-CLM-202407-1-1.v1-r1.6hr"

103

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/cordex-cmip6.DD.EUR-12.CLMcom-GERICS.EC-Earth3-Veg.ssp126.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.mon/stac"

- type "application/json"

- title "cordex-cmip6.DD.EUR-12.CLMcom-GERICS.EC-Earth3-Veg.ssp126.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.mon"

104

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/cordex-cmip6.DD.EUR-12.CLMcom-GERICS.EC-Earth3-Veg.ssp126.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.6hrPt/stac"

- type "application/json"

- title "cordex-cmip6.DD.EUR-12.CLMcom-GERICS.EC-Earth3-Veg.ssp126.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.6hrPt"

105

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/cordex-cmip6.DD.EUR-12.CLMcom-GERICS.EC-Earth3-Veg.ssp126.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.day/stac"

- type "application/json"

- title "cordex-cmip6.DD.EUR-12.CLMcom-GERICS.EC-Earth3-Veg.ssp126.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.day"

106

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/cordex-cmip6.DD.EUR-12.CLMcom-GERICS.EC-Earth3-Veg.ssp126.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.1hrPt/stac"

- type "application/json"

- title "cordex-cmip6.DD.EUR-12.CLMcom-GERICS.EC-Earth3-Veg.ssp126.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.1hrPt"

107

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/cordex-cmip6.DD.EUR-12.CLMcom-GERICS.EC-Earth3-Veg.ssp126.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.fx/stac"

- type "application/json"

- title "cordex-cmip6.DD.EUR-12.CLMcom-GERICS.EC-Earth3-Veg.ssp126.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.fx"

108

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/cordex-cmip6.DD.EUR-12.CLMcom-GERICS.EC-Earth3-Veg.ssp126.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.1hr/stac"

- type "application/json"

- title "cordex-cmip6.DD.EUR-12.CLMcom-GERICS.EC-Earth3-Veg.ssp126.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.1hr"

109

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/cordex-cmip6.DD.EUR-12.CLMcom-GERICS.EC-Earth3-Veg.ssp126.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.6hr/stac"

- type "application/json"

- title "cordex-cmip6.DD.EUR-12.CLMcom-GERICS.EC-Earth3-Veg.ssp126.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.6hr"

110

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/cordex-cmip6.DD.EUR-12.CLMcom-GERICS.EC-Earth3-Veg.ssp370.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.mon/stac"

- type "application/json"

- title "cordex-cmip6.DD.EUR-12.CLMcom-GERICS.EC-Earth3-Veg.ssp370.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.mon"

111

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/cordex-cmip6.DD.EUR-12.CLMcom-GERICS.EC-Earth3-Veg.ssp370.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.6hrPt/stac"

- type "application/json"

- title "cordex-cmip6.DD.EUR-12.CLMcom-GERICS.EC-Earth3-Veg.ssp370.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.6hrPt"

112

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/cordex-cmip6.DD.EUR-12.CLMcom-GERICS.EC-Earth3-Veg.ssp370.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.day/stac"

- type "application/json"

- title "cordex-cmip6.DD.EUR-12.CLMcom-GERICS.EC-Earth3-Veg.ssp370.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.day"

113

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/cordex-cmip6.DD.EUR-12.CLMcom-GERICS.EC-Earth3-Veg.ssp370.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.1hrPt/stac"

- type "application/json"

- title "cordex-cmip6.DD.EUR-12.CLMcom-GERICS.EC-Earth3-Veg.ssp370.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.1hrPt"

114

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/cordex-cmip6.DD.EUR-12.CLMcom-GERICS.EC-Earth3-Veg.ssp370.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.fx/stac"

- type "application/json"

- title "cordex-cmip6.DD.EUR-12.CLMcom-GERICS.EC-Earth3-Veg.ssp370.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.fx"

115

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/cordex-cmip6.DD.EUR-12.CLMcom-GERICS.EC-Earth3-Veg.ssp370.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.1hr/stac"

- type "application/json"

- title "cordex-cmip6.DD.EUR-12.CLMcom-GERICS.EC-Earth3-Veg.ssp370.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.1hr"

116

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/cordex-cmip6.DD.EUR-12.CLMcom-GERICS.EC-Earth3-Veg.ssp370.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.6hr/stac"

- type "application/json"

- title "cordex-cmip6.DD.EUR-12.CLMcom-GERICS.EC-Earth3-Veg.ssp370.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.6hr"

117

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/cordex-cmip6.DD.EUR-12.CLMcom-Hereon.ERA5.evaluation.r1i1p1f1.ICON-CLM-202407-1-1.v1-r2.mon/stac"

- type "application/json"

- title "cordex-cmip6.DD.EUR-12.CLMcom-Hereon.ERA5.evaluation.r1i1p1f1.ICON-CLM-202407-1-1.v1-r2.mon"

118

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/cordex-cmip6.DD.EUR-12.CLMcom-Hereon.ERA5.evaluation.r1i1p1f1.ICON-CLM-202407-1-1.v1-r2.6hrPt/stac"

- type "application/json"

- title "cordex-cmip6.DD.EUR-12.CLMcom-Hereon.ERA5.evaluation.r1i1p1f1.ICON-CLM-202407-1-1.v1-r2.6hrPt"

119

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/cordex-cmip6.DD.EUR-12.CLMcom-Hereon.ERA5.evaluation.r1i1p1f1.ICON-CLM-202407-1-1.v1-r2.day/stac"

- type "application/json"

- title "cordex-cmip6.DD.EUR-12.CLMcom-Hereon.ERA5.evaluation.r1i1p1f1.ICON-CLM-202407-1-1.v1-r2.day"

120

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/cordex-cmip6.DD.EUR-12.CLMcom-Hereon.ERA5.evaluation.r1i1p1f1.ICON-CLM-202407-1-1.v1-r2.1hrPt/stac"

- type "application/json"

- title "cordex-cmip6.DD.EUR-12.CLMcom-Hereon.ERA5.evaluation.r1i1p1f1.ICON-CLM-202407-1-1.v1-r2.1hrPt"

121

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/cordex-cmip6.DD.EUR-12.CLMcom-Hereon.ERA5.evaluation.r1i1p1f1.ICON-CLM-202407-1-1.v1-r2.fx/stac"

- type "application/json"

- title "cordex-cmip6.DD.EUR-12.CLMcom-Hereon.ERA5.evaluation.r1i1p1f1.ICON-CLM-202407-1-1.v1-r2.fx"

122

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/cordex-cmip6.DD.EUR-12.CLMcom-Hereon.ERA5.evaluation.r1i1p1f1.ICON-CLM-202407-1-1.v1-r2.1hr/stac"

- type "application/json"

- title "cordex-cmip6.DD.EUR-12.CLMcom-Hereon.ERA5.evaluation.r1i1p1f1.ICON-CLM-202407-1-1.v1-r2.1hr"

123

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/cordex-cmip6.DD.EUR-12.CLMcom-Hereon.ERA5.evaluation.r1i1p1f1.ICON-CLM-202407-1-1.v1-r2.6hr/stac"

- type "application/json"

- title "cordex-cmip6.DD.EUR-12.CLMcom-Hereon.ERA5.evaluation.r1i1p1f1.ICON-CLM-202407-1-1.v1-r2.6hr"

124

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/cordex-cmip6.DD.EUR-12.CLMcom-Hereon.ERA5.evaluation.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.mon/stac"

- type "application/json"

- title "cordex-cmip6.DD.EUR-12.CLMcom-Hereon.ERA5.evaluation.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.mon"

125

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/cordex-cmip6.DD.EUR-12.CLMcom-Hereon.ERA5.evaluation.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.6hrPt/stac"

- type "application/json"

- title "cordex-cmip6.DD.EUR-12.CLMcom-Hereon.ERA5.evaluation.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.6hrPt"

126

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/cordex-cmip6.DD.EUR-12.CLMcom-Hereon.ERA5.evaluation.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.day/stac"

- type "application/json"

- title "cordex-cmip6.DD.EUR-12.CLMcom-Hereon.ERA5.evaluation.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.day"

127

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/cordex-cmip6.DD.EUR-12.CLMcom-Hereon.ERA5.evaluation.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.1hrPt/stac"

- type "application/json"

- title "cordex-cmip6.DD.EUR-12.CLMcom-Hereon.ERA5.evaluation.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.1hrPt"

128

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/cordex-cmip6.DD.EUR-12.CLMcom-Hereon.ERA5.evaluation.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.fx/stac"

- type "application/json"

- title "cordex-cmip6.DD.EUR-12.CLMcom-Hereon.ERA5.evaluation.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.fx"

129

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/cordex-cmip6.DD.EUR-12.CLMcom-Hereon.ERA5.evaluation.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.1hr/stac"

- type "application/json"

- title "cordex-cmip6.DD.EUR-12.CLMcom-Hereon.ERA5.evaluation.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.1hr"

130

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/cordex-cmip6.DD.EUR-12.CLMcom-Hereon.ERA5.evaluation.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.6hr/stac"

- type "application/json"

- title "cordex-cmip6.DD.EUR-12.CLMcom-Hereon.ERA5.evaluation.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.6hr"

131

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/cordex-cmip6.DD.EUR-12.CLMcom-Hereon.EC-Earth3-Veg.historical.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.mon/stac"

- type "application/json"

- title "cordex-cmip6.DD.EUR-12.CLMcom-Hereon.EC-Earth3-Veg.historical.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.mon"

132

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/cordex-cmip6.DD.EUR-12.CLMcom-Hereon.EC-Earth3-Veg.historical.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.6hrPt/stac"

- type "application/json"

- title "cordex-cmip6.DD.EUR-12.CLMcom-Hereon.EC-Earth3-Veg.historical.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.6hrPt"

133

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/cordex-cmip6.DD.EUR-12.CLMcom-Hereon.EC-Earth3-Veg.historical.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.day/stac"

- type "application/json"

- title "cordex-cmip6.DD.EUR-12.CLMcom-Hereon.EC-Earth3-Veg.historical.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.day"

134

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/cordex-cmip6.DD.EUR-12.CLMcom-Hereon.EC-Earth3-Veg.historical.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.1hrPt/stac"

- type "application/json"

- title "cordex-cmip6.DD.EUR-12.CLMcom-Hereon.EC-Earth3-Veg.historical.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.1hrPt"

135

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/cordex-cmip6.DD.EUR-12.CLMcom-Hereon.EC-Earth3-Veg.historical.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.fx/stac"

- type "application/json"

- title "cordex-cmip6.DD.EUR-12.CLMcom-Hereon.EC-Earth3-Veg.historical.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.fx"

136

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/cordex-cmip6.DD.EUR-12.CLMcom-Hereon.EC-Earth3-Veg.historical.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.1hr/stac"

- type "application/json"

- title "cordex-cmip6.DD.EUR-12.CLMcom-Hereon.EC-Earth3-Veg.historical.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.1hr"

137

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/cordex-cmip6.DD.EUR-12.CLMcom-Hereon.EC-Earth3-Veg.historical.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.6hr/stac"

- type "application/json"

- title "cordex-cmip6.DD.EUR-12.CLMcom-Hereon.EC-Earth3-Veg.historical.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.6hr"

138

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/cordex-cmip6.DD.EUR-12.CLMcom-DWD.MPI-ESM1-2-HR.ssp585.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.mon/stac"

- type "application/json"

- title "cordex-cmip6.DD.EUR-12.CLMcom-DWD.MPI-ESM1-2-HR.ssp585.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.mon"

139

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/cordex-cmip6.DD.EUR-12.CLMcom-DWD.MPI-ESM1-2-HR.ssp585.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.6hrPt/stac"

- type "application/json"

- title "cordex-cmip6.DD.EUR-12.CLMcom-DWD.MPI-ESM1-2-HR.ssp585.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.6hrPt"

140

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/cordex-cmip6.DD.EUR-12.CLMcom-DWD.MPI-ESM1-2-HR.ssp585.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.day/stac"

- type "application/json"

- title "cordex-cmip6.DD.EUR-12.CLMcom-DWD.MPI-ESM1-2-HR.ssp585.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.day"

141

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/cordex-cmip6.DD.EUR-12.CLMcom-DWD.MPI-ESM1-2-HR.ssp585.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.fx/stac"

- type "application/json"

- title "cordex-cmip6.DD.EUR-12.CLMcom-DWD.MPI-ESM1-2-HR.ssp585.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.fx"

142

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/cordex-cmip6.DD.EUR-12.CLMcom-DWD.MPI-ESM1-2-HR.ssp585.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.1hr/stac"

- type "application/json"

- title "cordex-cmip6.DD.EUR-12.CLMcom-DWD.MPI-ESM1-2-HR.ssp585.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.1hr"

143

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/cordex-cmip6.DD.EUR-12.CLMcom-DWD.MPI-ESM1-2-HR.ssp585.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.6hr/stac"

- type "application/json"

- title "cordex-cmip6.DD.EUR-12.CLMcom-DWD.MPI-ESM1-2-HR.ssp585.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.6hr"

144

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/cordex-cmip6.DD.EUR-12.CLMcom-DWD.MPI-ESM1-2-HR.ssp245.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.mon/stac"

- type "application/json"

- title "cordex-cmip6.DD.EUR-12.CLMcom-DWD.MPI-ESM1-2-HR.ssp245.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.mon"

145

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/cordex-cmip6.DD.EUR-12.CLMcom-DWD.MPI-ESM1-2-HR.ssp245.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.6hrPt/stac"

- type "application/json"

- title "cordex-cmip6.DD.EUR-12.CLMcom-DWD.MPI-ESM1-2-HR.ssp245.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.6hrPt"

146

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/cordex-cmip6.DD.EUR-12.CLMcom-DWD.MPI-ESM1-2-HR.ssp245.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.day/stac"

- type "application/json"

- title "cordex-cmip6.DD.EUR-12.CLMcom-DWD.MPI-ESM1-2-HR.ssp245.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.day"

147

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/cordex-cmip6.DD.EUR-12.CLMcom-DWD.MPI-ESM1-2-HR.ssp245.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.fx/stac"

- type "application/json"

- title "cordex-cmip6.DD.EUR-12.CLMcom-DWD.MPI-ESM1-2-HR.ssp245.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.fx"

148

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/cordex-cmip6.DD.EUR-12.CLMcom-DWD.MPI-ESM1-2-HR.ssp245.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.1hr/stac"

- type "application/json"

- title "cordex-cmip6.DD.EUR-12.CLMcom-DWD.MPI-ESM1-2-HR.ssp245.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.1hr"

149

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/cordex-cmip6.DD.EUR-12.CLMcom-DWD.MPI-ESM1-2-HR.ssp245.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.6hr/stac"

- type "application/json"

- title "cordex-cmip6.DD.EUR-12.CLMcom-DWD.MPI-ESM1-2-HR.ssp245.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.6hr"

150

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/cordex-cmip6.DD.EUR-12.CLMcom-DWD.MPI-ESM1-2-HR.historical.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.mon/stac"

- type "application/json"

- title "cordex-cmip6.DD.EUR-12.CLMcom-DWD.MPI-ESM1-2-HR.historical.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.mon"

151

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/cordex-cmip6.DD.EUR-12.CLMcom-DWD.MPI-ESM1-2-HR.historical.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.6hrPt/stac"

- type "application/json"

- title "cordex-cmip6.DD.EUR-12.CLMcom-DWD.MPI-ESM1-2-HR.historical.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.6hrPt"

152

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/cordex-cmip6.DD.EUR-12.CLMcom-DWD.MPI-ESM1-2-HR.historical.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.day/stac"

- type "application/json"

- title "cordex-cmip6.DD.EUR-12.CLMcom-DWD.MPI-ESM1-2-HR.historical.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.day"

153

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/cordex-cmip6.DD.EUR-12.CLMcom-DWD.MPI-ESM1-2-HR.historical.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.fx/stac"

- type "application/json"

- title "cordex-cmip6.DD.EUR-12.CLMcom-DWD.MPI-ESM1-2-HR.historical.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.fx"

154

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/cordex-cmip6.DD.EUR-12.CLMcom-DWD.MPI-ESM1-2-HR.historical.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.1hr/stac"

- type "application/json"

- title "cordex-cmip6.DD.EUR-12.CLMcom-DWD.MPI-ESM1-2-HR.historical.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.1hr"

155

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/cordex-cmip6.DD.EUR-12.CLMcom-DWD.MPI-ESM1-2-HR.historical.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.6hr/stac"

- type "application/json"

- title "cordex-cmip6.DD.EUR-12.CLMcom-DWD.MPI-ESM1-2-HR.historical.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.6hr"

156

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/cordex-cmip6.DD.EUR-12.CLMcom-DWD.MPI-ESM1-2-HR.ssp126.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.mon/stac"

- type "application/json"

- title "cordex-cmip6.DD.EUR-12.CLMcom-DWD.MPI-ESM1-2-HR.ssp126.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.mon"

157

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/cordex-cmip6.DD.EUR-12.CLMcom-DWD.MPI-ESM1-2-HR.ssp126.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.6hrPt/stac"

- type "application/json"

- title "cordex-cmip6.DD.EUR-12.CLMcom-DWD.MPI-ESM1-2-HR.ssp126.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.6hrPt"

158

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/cordex-cmip6.DD.EUR-12.CLMcom-DWD.MPI-ESM1-2-HR.ssp126.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.day/stac"

- type "application/json"

- title "cordex-cmip6.DD.EUR-12.CLMcom-DWD.MPI-ESM1-2-HR.ssp126.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.day"

159

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/cordex-cmip6.DD.EUR-12.CLMcom-DWD.MPI-ESM1-2-HR.ssp126.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.fx/stac"

- type "application/json"

- title "cordex-cmip6.DD.EUR-12.CLMcom-DWD.MPI-ESM1-2-HR.ssp126.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.fx"

160

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/cordex-cmip6.DD.EUR-12.CLMcom-DWD.MPI-ESM1-2-HR.ssp126.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.1hr/stac"

- type "application/json"

- title "cordex-cmip6.DD.EUR-12.CLMcom-DWD.MPI-ESM1-2-HR.ssp126.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.1hr"

161

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/cordex-cmip6.DD.EUR-12.CLMcom-DWD.MPI-ESM1-2-HR.ssp126.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.6hr/stac"

- type "application/json"

- title "cordex-cmip6.DD.EUR-12.CLMcom-DWD.MPI-ESM1-2-HR.ssp126.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.6hr"

162

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/cordex-cmip6.DD.EUR-12.CLMcom-DWD.MPI-ESM1-2-HR.ssp370.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.mon/stac"

- type "application/json"

- title "cordex-cmip6.DD.EUR-12.CLMcom-DWD.MPI-ESM1-2-HR.ssp370.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.mon"

163

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/cordex-cmip6.DD.EUR-12.CLMcom-DWD.MPI-ESM1-2-HR.ssp370.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.6hrPt/stac"

- type "application/json"

- title "cordex-cmip6.DD.EUR-12.CLMcom-DWD.MPI-ESM1-2-HR.ssp370.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.6hrPt"

164

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/cordex-cmip6.DD.EUR-12.CLMcom-DWD.MPI-ESM1-2-HR.ssp370.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.day/stac"

- type "application/json"

- title "cordex-cmip6.DD.EUR-12.CLMcom-DWD.MPI-ESM1-2-HR.ssp370.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.day"

165

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/cordex-cmip6.DD.EUR-12.CLMcom-DWD.MPI-ESM1-2-HR.ssp370.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.fx/stac"

- type "application/json"

- title "cordex-cmip6.DD.EUR-12.CLMcom-DWD.MPI-ESM1-2-HR.ssp370.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.fx"

166

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/cordex-cmip6.DD.EUR-12.CLMcom-DWD.MPI-ESM1-2-HR.ssp370.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.1hr/stac"

- type "application/json"

- title "cordex-cmip6.DD.EUR-12.CLMcom-DWD.MPI-ESM1-2-HR.ssp370.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.1hr"

167

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/cordex-cmip6.DD.EUR-12.CLMcom-DWD.MPI-ESM1-2-HR.ssp370.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.6hr/stac"

- type "application/json"

- title "cordex-cmip6.DD.EUR-12.CLMcom-DWD.MPI-ESM1-2-HR.ssp370.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.6hr"

168

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/cordex-cmip6.DD.EUR-12.CLMcom-KIT.MIROC6.ssp245.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.6hrPt/stac"

- type "application/json"

- title "cordex-cmip6.DD.EUR-12.CLMcom-KIT.MIROC6.ssp245.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.6hrPt"

169

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/cordex-cmip6.DD.EUR-12.CLMcom-KIT.MIROC6.ssp245.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.1hrPt/stac"

- type "application/json"

- title "cordex-cmip6.DD.EUR-12.CLMcom-KIT.MIROC6.ssp245.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.1hrPt"

170

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/cordex-cmip6.DD.EUR-12.CLMcom-KIT.MIROC6.historical.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.mon/stac"

- type "application/json"

- title "cordex-cmip6.DD.EUR-12.CLMcom-KIT.MIROC6.historical.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.mon"

171

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/cordex-cmip6.DD.EUR-12.CLMcom-KIT.MIROC6.historical.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.6hrPt/stac"

- type "application/json"

- title "cordex-cmip6.DD.EUR-12.CLMcom-KIT.MIROC6.historical.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.6hrPt"

172

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/cordex-cmip6.DD.EUR-12.CLMcom-KIT.MIROC6.historical.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.day/stac"

- type "application/json"

- title "cordex-cmip6.DD.EUR-12.CLMcom-KIT.MIROC6.historical.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.day"

173

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/cordex-cmip6.DD.EUR-12.CLMcom-KIT.MIROC6.historical.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.1hrPt/stac"

- type "application/json"

- title "cordex-cmip6.DD.EUR-12.CLMcom-KIT.MIROC6.historical.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.1hrPt"

174

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/cordex-cmip6.DD.EUR-12.CLMcom-KIT.MIROC6.historical.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.fx/stac"

- type "application/json"

- title "cordex-cmip6.DD.EUR-12.CLMcom-KIT.MIROC6.historical.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.fx"

175

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/cordex-cmip6.DD.EUR-12.CLMcom-KIT.MIROC6.historical.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.1hr/stac"

- type "application/json"

- title "cordex-cmip6.DD.EUR-12.CLMcom-KIT.MIROC6.historical.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.1hr"

176

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/cordex-cmip6.DD.EUR-12.CLMcom-KIT.MIROC6.historical.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.6hr/stac"

- type "application/json"

- title "cordex-cmip6.DD.EUR-12.CLMcom-KIT.MIROC6.historical.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.6hr"

177

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/cordex-cmip6.DD.EUR-12.CLMcom-KIT.MIROC6.ssp126.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.mon/stac"

- type "application/json"

- title "cordex-cmip6.DD.EUR-12.CLMcom-KIT.MIROC6.ssp126.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.mon"

178

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/cordex-cmip6.DD.EUR-12.CLMcom-KIT.MIROC6.ssp126.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.6hrPt/stac"

- type "application/json"

- title "cordex-cmip6.DD.EUR-12.CLMcom-KIT.MIROC6.ssp126.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.6hrPt"

179

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/cordex-cmip6.DD.EUR-12.CLMcom-KIT.MIROC6.ssp126.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.day/stac"

- type "application/json"

- title "cordex-cmip6.DD.EUR-12.CLMcom-KIT.MIROC6.ssp126.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.day"

180

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/cordex-cmip6.DD.EUR-12.CLMcom-KIT.MIROC6.ssp126.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.1hrPt/stac"

- type "application/json"

- title "cordex-cmip6.DD.EUR-12.CLMcom-KIT.MIROC6.ssp126.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.1hrPt"

181

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/cordex-cmip6.DD.EUR-12.CLMcom-KIT.MIROC6.ssp126.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.fx/stac"

- type "application/json"

- title "cordex-cmip6.DD.EUR-12.CLMcom-KIT.MIROC6.ssp126.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.fx"

182

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/cordex-cmip6.DD.EUR-12.CLMcom-KIT.MIROC6.ssp126.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.1hr/stac"

- type "application/json"

- title "cordex-cmip6.DD.EUR-12.CLMcom-KIT.MIROC6.ssp126.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.1hr"

183

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/cordex-cmip6.DD.EUR-12.CLMcom-KIT.MIROC6.ssp126.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.6hr/stac"

- type "application/json"

- title "cordex-cmip6.DD.EUR-12.CLMcom-KIT.MIROC6.ssp126.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.6hr"

184

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/cordex-cmip6.DD.EUR-12.CLMcom-KIT.MIROC6.ssp370.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.mon/stac"

- type "application/json"

- title "cordex-cmip6.DD.EUR-12.CLMcom-KIT.MIROC6.ssp370.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.mon"

185

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/cordex-cmip6.DD.EUR-12.CLMcom-KIT.MIROC6.ssp370.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.6hrPt/stac"

- type "application/json"

- title "cordex-cmip6.DD.EUR-12.CLMcom-KIT.MIROC6.ssp370.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.6hrPt"

186

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/cordex-cmip6.DD.EUR-12.CLMcom-KIT.MIROC6.ssp370.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.day/stac"

- type "application/json"

- title "cordex-cmip6.DD.EUR-12.CLMcom-KIT.MIROC6.ssp370.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.day"

187

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/cordex-cmip6.DD.EUR-12.CLMcom-KIT.MIROC6.ssp370.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.1hrPt/stac"

- type "application/json"

- title "cordex-cmip6.DD.EUR-12.CLMcom-KIT.MIROC6.ssp370.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.1hrPt"

188

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/cordex-cmip6.DD.EUR-12.CLMcom-KIT.MIROC6.ssp370.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.fx/stac"

- type "application/json"

- title "cordex-cmip6.DD.EUR-12.CLMcom-KIT.MIROC6.ssp370.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.fx"

189

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/cordex-cmip6.DD.EUR-12.CLMcom-KIT.MIROC6.ssp370.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.1hr/stac"

- type "application/json"

- title "cordex-cmip6.DD.EUR-12.CLMcom-KIT.MIROC6.ssp370.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.1hr"

190

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/cordex-cmip6.DD.EUR-12.CLMcom-KIT.MIROC6.ssp370.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.6hr/stac"

- type "application/json"

- title "cordex-cmip6.DD.EUR-12.CLMcom-KIT.MIROC6.ssp370.r1i1p1f1.ICON-CLM-202407-1-1.v1-r1.6hr"

191

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/icon-epoc.control-1990.v20250325.atmos.native.2d_6h_inst/stac"

- type "application/json"

- title "icon-epoc.control-1990.v20250325.atmos.native.2d_6h_inst"

192

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/icon-epoc.control-1990.v20250325.atmos.native.2d_daily_max/stac"

- type "application/json"

- title "icon-epoc.control-1990.v20250325.atmos.native.2d_daily_max"

193

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/icon-epoc.control-1990.v20250325.atmos.native.2d_daily_mean/stac"

- type "application/json"

- title "icon-epoc.control-1990.v20250325.atmos.native.2d_daily_mean"

194

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/icon-epoc.control-1990.v20250325.atmos.native.2d_daily_min/stac"

- type "application/json"

- title "icon-epoc.control-1990.v20250325.atmos.native.2d_daily_min"

195

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/icon-epoc.control-1990.v20250325.atmos.native.2d_monthly_mean/stac"

- type "application/json"

- title "icon-epoc.control-1990.v20250325.atmos.native.2d_monthly_mean"

196

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/icon-epoc.control-1990.v20250325.atmos.native.mon/stac"

- type "application/json"

- title "icon-epoc.control-1990.v20250325.atmos.native.mon"

197

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/icon-epoc.control-1990.v20250325.atmos.native.pl_6h_inst/stac"

- type "application/json"

- title "icon-epoc.control-1990.v20250325.atmos.native.pl_6h_inst"

198

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/icon-epoc.control-1990.v20250325.land.native.2d_daily_mean/stac"

- type "application/json"

- title "icon-epoc.control-1990.v20250325.land.native.2d_daily_mean"

199

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/icon-epoc.control-1990.v20250325.land.native.2d_monthly_mean/stac"

- type "application/json"

- title "icon-epoc.control-1990.v20250325.land.native.2d_monthly_mean"

200

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/icon-epoc.control-1990.v20250325.land.native.mon/stac"

- type "application/json"

- title "icon-epoc.control-1990.v20250325.land.native.mon"

201

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/icon-epoc.control-1990.v20250325.ocean.native.2d_daily_mean/stac"

- type "application/json"

- title "icon-epoc.control-1990.v20250325.ocean.native.2d_daily_mean"

202

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/icon-epoc.control-1990.v20250325.ocean.native.2d_monthly_mean/stac"

- type "application/json"

- title "icon-epoc.control-1990.v20250325.ocean.native.2d_monthly_mean"

203

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/icon-epoc.control-1990.v20250325.ocean.native.2d_monthly_square/stac"

- type "application/json"

- title "icon-epoc.control-1990.v20250325.ocean.native.2d_monthly_square"

204

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/icon-epoc.control-1990.v20250325.ocean.native.age_monthly_mean/stac"

- type "application/json"

- title "icon-epoc.control-1990.v20250325.ocean.native.age_monthly_mean"

205

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/icon-epoc.control-1990.v20250325.ocean.native.eddy_monthly_mean/stac"

- type "application/json"

- title "icon-epoc.control-1990.v20250325.ocean.native.eddy_monthly_mean"

206

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/icon-epoc.control-1990.v20250325.ocean.native.layers_monthly_mean/stac"

- type "application/json"

- title "icon-epoc.control-1990.v20250325.ocean.native.layers_monthly_mean"

207

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/icon-epoc.control-1990.v20250325.ocean.native.mon/stac"

- type "application/json"

- title "icon-epoc.control-1990.v20250325.ocean.native.mon"

208

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/tape_test/stac"

- type "application/json"

- title "tape_test"

209

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/hadgem3-gc5-n216-orca025.eerie-picontrol.atmos.gr025.daily/stac"

- type "application/json"

- title "hadgem3-gc5-n216-orca025.eerie-picontrol.atmos.gr025.daily"

210

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/hadgem3-gc5-n216-orca025.eerie-picontrol.atmos.native.atmos_daily_center/stac"

- type "application/json"

- title "hadgem3-gc5-n216-orca025.eerie-picontrol.atmos.native.atmos_daily_center"

211

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/hadgem3-gc5-n216-orca025.eerie-picontrol.atmos.native.atmos_daily_edge/stac"

- type "application/json"

- title "hadgem3-gc5-n216-orca025.eerie-picontrol.atmos.native.atmos_daily_edge"

212

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/hadgem3-gc5-n216-orca025.eerie-picontrol.atmos.native.atmos_monthly_aermon/stac"

- type "application/json"

- title "hadgem3-gc5-n216-orca025.eerie-picontrol.atmos.native.atmos_monthly_aermon"

213

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/hadgem3-gc5-n216-orca025.eerie-picontrol.atmos.native.atmos_monthly_amon_center/stac"

- type "application/json"

- title "hadgem3-gc5-n216-orca025.eerie-picontrol.atmos.native.atmos_monthly_amon_center"

214

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/hadgem3-gc5-n216-orca025.eerie-picontrol.atmos.native.atmos_monthly_amon_edge/stac"

- type "application/json"

- title "hadgem3-gc5-n216-orca025.eerie-picontrol.atmos.native.atmos_monthly_amon_edge"

215

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/hadgem3-gc5-n216-orca025.eerie-picontrol.atmos.native.atmos_monthly_emon/stac"

- type "application/json"

- title "hadgem3-gc5-n216-orca025.eerie-picontrol.atmos.native.atmos_monthly_emon"

216

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/hadgem3-gc5-n216-orca025.eerie-picontrol.ocean.gr025.daily/stac"

- type "application/json"

- title "hadgem3-gc5-n216-orca025.eerie-picontrol.ocean.gr025.daily"

217

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/hadgem3-gc5-n216-orca025.eerie-picontrol.ocean.gr025.monthly/stac"

- type "application/json"

- title "hadgem3-gc5-n216-orca025.eerie-picontrol.ocean.gr025.monthly"

218

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/hadgem3-gc5-n640-orca12.eerie-picontrol.atmos.gr025.daily/stac"

- type "application/json"

- title "hadgem3-gc5-n640-orca12.eerie-picontrol.atmos.gr025.daily"

219

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/hadgem3-gc5-n640-orca12.eerie-picontrol.atmos.native.atmos_daily_center/stac"

- type "application/json"

- title "hadgem3-gc5-n640-orca12.eerie-picontrol.atmos.native.atmos_daily_center"

220

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/hadgem3-gc5-n640-orca12.eerie-picontrol.atmos.native.atmos_daily_edge/stac"

- type "application/json"

- title "hadgem3-gc5-n640-orca12.eerie-picontrol.atmos.native.atmos_daily_edge"

221

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/hadgem3-gc5-n640-orca12.eerie-picontrol.atmos.native.atmos_monthly_aermon/stac"

- type "application/json"

- title "hadgem3-gc5-n640-orca12.eerie-picontrol.atmos.native.atmos_monthly_aermon"

222

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/hadgem3-gc5-n640-orca12.eerie-picontrol.atmos.native.atmos_monthly_amon_center/stac"

- type "application/json"

- title "hadgem3-gc5-n640-orca12.eerie-picontrol.atmos.native.atmos_monthly_amon_center"

223

- rel "child"

- href "https://eerie.cloud.dkrz.de/datasets/hadgem3-gc5-n640-orca12.eerie-picontrol.atmos.native.atmos_monthly_amon_edge/stac"

- type "application/json"